Ready for a crash course in color? Photographer Tony Northrup takes an in-depth look at what goes on inside our cameras and how, exactly, our images are made.

First, it’s important to understand how color works. Believe it or not, colors are all in our heads.

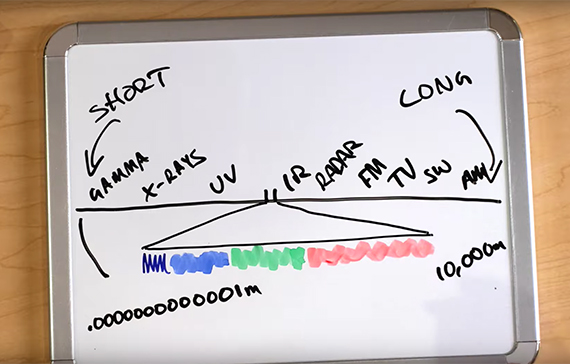

In reality, we’re constantly bombarded with light across a wide range of wavelengths. We can’t perceive most of them, and even if we could, it would be too much information for our brains to handle.

However, some of that light carries information that is valuable for navigating the world around us. Without it, we wouldn’t be able to find food, avoid predators, or make sense of our surroundings nearly as well. So our brains assign colors to the wavelengths of light that are long enough to penetrate the atmosphere but short enough to bounce off objects.

This makeshift illustration shows that the range of wavelengths visible to humans, roughly 380 to 700 nanometers, is actually quite limited.
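Because the article describes light by both frequency and wavelength, it may help to see how the two relate: frequency equals the speed of light divided by wavelength, so shorter wavelengths mean higher frequencies. The short Python sketch below converts the approximate edges of the visible range, assumed here to be about 380 and 700 nm (published figures vary slightly), into terahertz. The function name is hypothetical, chosen for illustration.

```python
# Convert a wavelength of light into its frequency using f = c / wavelength.
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def wavelength_nm_to_frequency_thz(wavelength_nm: float) -> float:
    """Return the frequency (THz) of light with the given wavelength (nm)."""
    wavelength_m = wavelength_nm * 1e-9
    return SPEED_OF_LIGHT_M_PER_S / wavelength_m / 1e12


# Assumed approximate edges of human vision; exact limits differ between sources.
for label, nm in [("violet edge", 380), ("red edge", 700)]:
    print(f"{label}: {nm} nm is about {wavelength_nm_to_frequency_thz(nm):.0f} THz")
```

Run as written, this reports about 789 THz for the violet edge and about 428 THz for the red edge, so the whole visible band spans less than a factor of two in frequency.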

You may be surprised to learn that the way a camera makes sense of light isn’t all that different from what our brains do.

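The eye works much like a camera. Light passes through the lens at the front of the eye and lands on the nerves at the back (the retina), much as a camera lens focuses light onto a sensor. The brain then plays the role of the camera’s processor, making sense of those nerve signals and translating them into an easy-to-digest mental image.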

Inside the human eye are three types of cone cells, commonly called red, green, and blue cones.

Roughly speaking, green cones respond most strongly to green light, red cones to red light, and blue cones to blue light, although their sensitivities overlap. But what happens when you see something that falls in between?

If you’re picking up a single wavelength of light that triggers both blue and green cones, for instance, your brain might be able to deduce that you’re looking at a color that falls between blue and green, such as cyan.

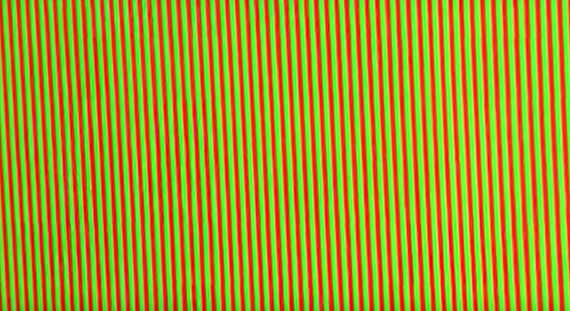

However, it’s also possible to “trick” the brain into seeing a color. If you’re looking at a blue light frequency and an adjacent (but separate) green light frequency simultaneously, your brain may very well combine the two into one uniform shade of cyan in order to simplify things.

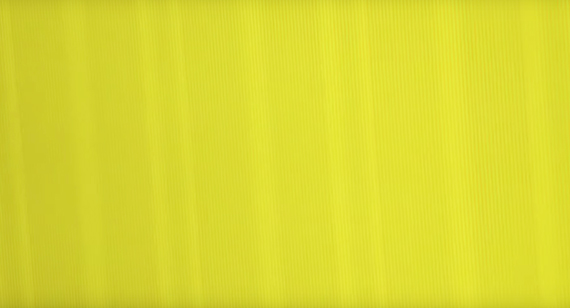

At first glance, this swatch may appear yellow…

…but a closer view reveals that we’re simply seeing alternating red and green lines. Our brains “combine” the two to create yellow.
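A rough way to see why the stripes read as yellow is to average neighboring pixel colors, which loosely mimics the blur that happens when detail is too fine to resolve. This Python sketch is a toy model under that assumption: it averages gamma-encoded RGB values directly and ignores how real vision works, so treat the numbers as illustrative only. The function name `average_color` is hypothetical.

```python
# Toy model of the red/green swatch: average neighboring pixels channel by channel.
# Assumption: viewing-distance blur is modelled as a plain average (no gamma handling).
RED = (255, 0, 0)
GREEN = (0, 255, 0)


def average_color(pixels):
    """Average a list of (r, g, b) tuples channel by channel."""
    n = len(pixels)
    return tuple(sum(p[i] for p in pixels) / n for i in range(3))


# Eight one-pixel stripes alternating red and green.
stripes = [RED if x % 2 == 0 else GREEN for x in range(8)]
print(average_color(stripes))  # (127.5, 127.5, 0.0): equal red and green, no blue
```

Equal parts red and green with no blue is a (dim) yellow. Averaging blue and green the same way, `average_color([(0, 0, 255), (0, 255, 0)])`, gives `(0.0, 127.5, 127.5)`, a cyan, in line with the blue-and-green example above.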

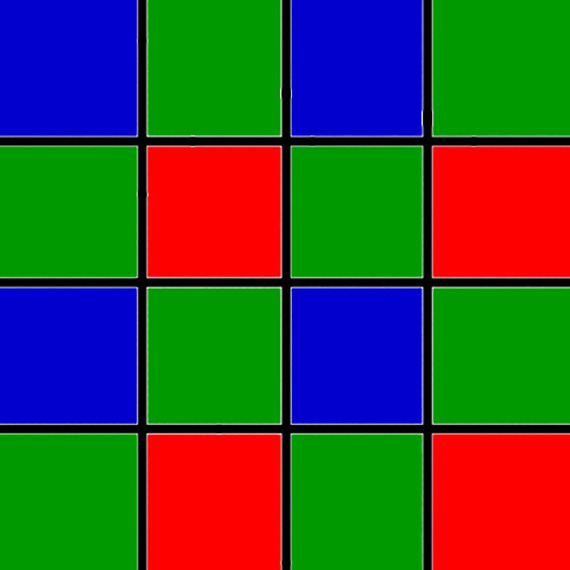

Since we’ve not yet figured out how to perfectly record and reproduce every perceivable color, cameras use combinations of red, blue, and green dots to create color photos.

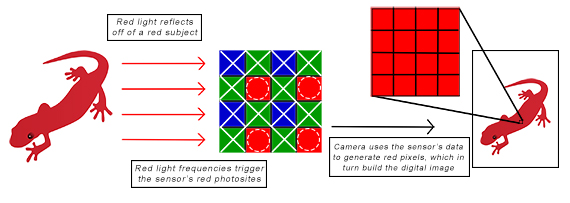

When you take a picture with a digital camera, your camera is actually capturing light on a large grid of light-sensitive spots called photosites. Each photosite measures only how much light hits it, so a colored filter placed over it makes it respond to red, green, or blue light. Most digital cameras arrange these filters using the Bayer color filter array, which looks like this:

Bayer filters use one blue, one red, and two green photosites in every 2×2 block. Green gets twice as many photosites because human vision is most sensitive to green light, which carries most of the detail we perceive as brightness.

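In this simplified model, a 2×2 grid of four photosites makes up one pixel. Adjacent pixels share photosites, which creates a more cohesive, accurate image and means the sensor still produces roughly one pixel per photosite.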

Because these groups overlap, each photosite’s reading is shared with neighboring pixels. As you can see in this diagram, the information recorded for one pixel also pertains to all of the pixels around it.
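To make the overlapping-pixel idea concrete, here is a small Python sketch of a Bayer mosaic. It assumes the common RGGB ordering (real sensors may start the pattern on a different corner), and the helper names `bayer_color` and `window_counts` are hypothetical. It prints the filter layout of a tiny sensor, then checks that every 2×2 window, wherever it starts, contains exactly one red, two green, and one blue photosite.

```python
from collections import Counter

# Sketch of an RGGB Bayer mosaic and the "overlapping 2x2 pixel" model.
# Assumption: RGGB ordering; other sensors use GRBG, GBRG or BGGR.


def bayer_color(row: int, col: int) -> str:
    """Filter color over photosite (row, col) in an RGGB Bayer pattern."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def window_counts(top: int, left: int) -> Counter:
    """Count filter colors in the 2x2 window whose top-left photosite is (top, left)."""
    return Counter(bayer_color(top + dr, left + dc) for dr in (0, 1) for dc in (0, 1))


height, width = 4, 6  # a tiny hypothetical sensor
for r in range(height):
    print(" ".join(bayer_color(r, c) for c in range(width)))

# Every overlapping 2x2 window holds 1 red, 2 green and 1 blue photosite.
windows = [(r, c) for r in range(height - 1) for c in range(width - 1)]
assert all(window_counts(r, c) == Counter({"R": 1, "G": 2, "B": 1}) for r, c in windows)
print(len(windows), "overlapping windows from", height * width, "photosites")
```

A sensor has (height - 1) × (width - 1) such windows, so on a real sensor overlapping windows yield close to one pixel per photosite: a 6000 × 4000 sensor gives 5999 × 3999 = 23,990,001 windows from 24,000,000 photosites.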

Using the brightness each photosite recorded, demosaicing algorithms fill in the gaps: at every photosite, they estimate the two colors that its filter did not let it record.
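The gap-filling step is usually called demosaicing. Below is a minimal Python sketch of one of the simplest approaches, bilinear-style interpolation: each missing color at a photosite is estimated by averaging the nearby photosites that did record that color. It assumes an RGGB layout and a mosaic of at least 2 × 2 photosites; real cameras and raw converters use far more sophisticated, edge-aware methods, and the function names here are hypothetical.

```python
# Hypothetical bilinear-style demosaic for an RGGB Bayer mosaic.
# mosaic[r][c] holds the single brightness value recorded at photosite (r, c).


def bayer_color(row, col):
    """Filter color over photosite (row, col), assuming an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def demosaic(mosaic):
    """Return a full (R, G, B) tuple for every photosite (mosaic must be at least 2x2)."""
    height, width = len(mosaic), len(mosaic[0])
    image = []
    for r in range(height):
        out_row = []
        for c in range(width):
            # Collect readings from the 3x3 neighborhood, clipped at the sensor edges.
            samples = {"R": [], "G": [], "B": []}
            for rr in range(max(0, r - 1), min(height, r + 2)):
                for cc in range(max(0, c - 1), min(width, c + 2)):
                    samples[bayer_color(rr, cc)].append(mosaic[rr][cc])
            own = bayer_color(r, c)
            pixel = []
            for color in ("R", "G", "B"):
                if color == own:
                    pixel.append(float(mosaic[r][c]))  # measured directly
                else:
                    values = samples[color]
                    pixel.append(sum(values) / len(values))  # estimated from neighbors
            out_row.append(tuple(pixel))
        image.append(out_row)
    return image


# Usage: "photograph" a hypothetical flat-colored subject and rebuild its color.
scene = (100, 150, 50)
channel = {"R": 0, "G": 1, "B": 2}
mosaic = [[scene[channel[bayer_color(r, c)]] for c in range(4)] for r in range(4)]
result = demosaic(mosaic)
print(result[1][2])  # (100.0, 150.0, 50.0)
```

With a flat-colored subject the sketch recovers the color exactly. At sharp edges, plain averaging smears colors across the boundary, which is one source of the color fringing that better demosaicing algorithms work to avoid.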

Repeated across millions of pixels, this information adds up to a detailed picture.

A simplified diagram showing how light is processed through a camera’s sensor.

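The Bayer filter’s inherent flaw is that each photosite records only one color, so there will always be information missing from the grid that has to be estimated. A few alternatives already exist, such as Foveon sensors, which stack three light-sensitive layers to record red, green, and blue at every location, and pixel shift, which moves the sensor between several exposures so each location is sampled through every filter color. However, both still have major shortcomings to make up for: Foveon sensors tend to be noisier at high ISO, and pixel shift only works when the camera and subject stay perfectly still.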
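Pixel shift avoids interpolation by taking several exposures. This Python sketch models a four-shot version: the sensor moves by one photosite between shots, so every location in the scene is sampled through one red, two green, and one blue filter. The RGGB layout, the exact one-photosite moves, and the perfectly still scene are simplifying assumptions, and the function names are hypothetical; camera makers implement pixel shift in different ways.

```python
# Hypothetical four-shot pixel-shift model on an RGGB sensor.
# scene[r][c] is the true (R, G, B) light at each location; the subject is assumed still.


def bayer_color(row, col):
    """Filter color over photosite (row, col), assuming an RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


# Sensor offsets (down, right), in photosites, for the four exposures.
SHIFTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
CHANNEL = {"R": 0, "G": 1, "B": 2}


def pixel_shift(scene):
    """Combine four shifted exposures into a full-color image without interpolation."""
    image = []
    for r, row in enumerate(scene):
        out_row = []
        for c, true_rgb in enumerate(row):
            samples = {"R": [], "G": [], "B": []}
            for dr, dc in SHIFTS:
                # After the move, a different filter sits over this scene location.
                color = bayer_color(r + dr, c + dc)
                samples[color].append(true_rgb[CHANNEL[color]])
            # The four offsets cover every filter position: 1 R, 2 G and 1 B reading.
            out_row.append(tuple(sum(samples[k]) / len(samples[k]) for k in ("R", "G", "B")))
        image.append(out_row)
    return image


scene = [[(10, 20, 30), (40, 50, 60)],
         [(70, 80, 90), (1, 2, 3)]]
print(pixel_shift(scene) == scene)  # True
```

Because every channel is measured rather than estimated, the result matches the scene exactly here. If the subject moved between shots, the four readings at a location would come from different points, and the colors would no longer line up.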

Meanwhile, Fuji marches to the beat of its own drum with its X-Trans sensor, which uses a more irregular 6×6 filter pattern. Because green photosites pick up the largest range of light, the design gives green an even larger share than Bayer does: 20 of every 36 photosites rather than half. On one hand, this produces a crisper image with less digital noise. On the other hand, red and blue photosites are sparser, so at times there are more color gaps that the camera’s processing has to fill in.
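A quick count shows how much more green X-Trans uses. The Python sketch below compares the filter shares of a 2×2 Bayer tile and a 6×6 X-Trans tile. The X-Trans layout is transcribed from the pattern as it is commonly published and should be treated as illustrative; the 20 green, 8 red, 8 blue split per 36 photosites is the part that matters here. The tile and function names are hypothetical.

```python
from collections import Counter

# Compare filter proportions in a repeating Bayer tile and a repeating X-Trans tile.
# Assumption: this X-Trans arrangement follows commonly published diagrams.
BAYER_TILE = ["RG",
              "GB"]

XTRANS_TILE = ["GBGGRG",
               "RGRBGB",
               "GBGGRG",
               "GRGGBG",
               "BGBRGR",
               "GRGGBG"]


def color_fractions(tile):
    """Return the share of R, G and B filters in a repeating tile."""
    counts = Counter("".join(tile))
    total = sum(counts.values())
    return {color: counts[color] / total for color in "RGB"}


for name, tile in [("Bayer", BAYER_TILE), ("X-Trans", XTRANS_TILE)]:
    shares = color_fractions(tile)
    print(name, {k: f"{v:.1%}" for k, v in shares.items()})

# Design property often cited for X-Trans: every row and column contains R, G and B.
columns = ["".join(row[i] for row in XTRANS_TILE) for i in range(6)]
assert all(set(line) == set("RGB") for line in XTRANS_TILE + columns)
```

The count puts green at 50.0% for Bayer and 55.6% for X-Trans, with red and blue each dropping from 25.0% to 22.2%, which is why red and blue samples sit farther apart on an X-Trans sensor.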

We’re still far from perfecting digital color reproduction, and there’s plenty to be learned on the subject. Nevertheless, with each passing day, we come a little closer to achieving images that match our reality.

The post Camera Sensor vs Eyes: Seeing Color appeared first on PictureCorrect.
